About four months ago, our API tests took 12 to 15 minutes to run on a decently powered Linux machine, and test runs on Macs were slightly slower even on comparable hardware. Today it takes us 1 minute and 55 seconds to run the entire test suite, including setup and teardown, saving us ~50 hours of development time every week.

The number of API tests we run has more than doubled (from ~2000) since we last wrote about it on our engineering blog.

Why bother?

Testing and Continuous Integration are core engineering values that we uphold at ClassDojo. This means every commit to the master branch is built, tested and deployed to production automatically.

Some advantages of having a fast testing system:

Code deployments are faster: We automatically build and test every commit that goes into our Canary and Production releases. A quick build time means we can push out code quickly and, if there is a bug, ship a patch just as fast.

Avoid distractions: Tests run many times every day, both during regular development and during deployments. Not having to wait an extra 8 minutes each time means we can move much faster.

Iterate fast to build products that our users love: When tests run this fast, there is really no reason to skip writing them. This directly improves the quality of the code we ship and, therefore, the user experience.

Test run stats

As an example, the week of May 14 saw 130 commits to our main API’s master branch.

- Time saved per test-suite run: ~10 minutes

- Highest average number of commits to the master branch on any day of the week: 40

- Time saved just on Continuous Integration builds per day: 40 × 10 = 400 minutes, or about 6.7 hours

- Assuming another ~20 total test-suite runs during local development every day, time saved per week: ~50 hours
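Spelled out, and assuming a five-day work week, the weekly estimate combines the CI runs and the local runs like this:

$$
(40 + 20)\ \tfrac{\text{runs}}{\text{day}} \times 10\ \tfrac{\text{min}}{\text{run}} \times 5\ \tfrac{\text{days}}{\text{week}} = 3000\ \tfrac{\text{min}}{\text{week}} = 50\ \tfrac{\text{h}}{\text{week}}
$$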

The road to super-fast tests

We experimented with running our API inside Vagrant VMs for a while. This gave us a standard development environment and avoided the headaches of mismatched dependencies between development and production.

We went through a few iterations of speeding up our tests:

Database access speedup

Since our tests make real database calls, the first step was to try running our database dependencies in memory. We got Mongo running in memory, which saved us between 1.5 and 2 minutes.

However, an in-memory Mongo setup is fickle: development machines run different OS versions and flavours (Ubuntu vs. OS X), and auto-mount teardown on OS X doesn't always play nicely.

Running tests in parallel using Docker

The next optimization was splitting the tests into chunks that run in parallel, and Docker came to the rescue. We found Docker images for MongoDB and MySQL that were pre-baked to run with tmpfs and gave us better performance right out of the box. tmpfs is a virtual file system that resides in memory (or your swap partitions) and gives a good speed boost to directories mounted on it. A word of caution: tmpfs data is volatile, and all of it is lost on shutdown, reboot or crash. That works perfectly for us, since no fixture data cleanup is required after the tests end.
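As an illustration, here is a minimal sketch of tmpfs-backed database services in docker-compose form, generated from Python so the structure can be checked. It assumes the official `mongo` and `mysql` images and their default data directories (`/data/db` and `/var/lib/mysql`); the pre-baked images we actually use may be set up differently.

```python
import json

# Default data directories of the official images (an assumption; the
# pre-baked images mentioned above may be configured differently).
MONGO_DATA_DIR = "/data/db"
MYSQL_DATA_DIR = "/var/lib/mysql"


def tmpfs_database_services() -> dict:
    """Return docker-compose service definitions whose data lives in RAM.

    The compose `tmpfs` key mounts an in-memory filesystem at each listed
    path; the mount is discarded when the container stops, so every test
    run starts from an empty database.
    """
    return {
        "mongo": {
            "image": "mongo",
            "tmpfs": [MONGO_DATA_DIR],
        },
        "mysql": {
            "image": "mysql",
            # Lets the official image start without a root password: fine
            # for throwaway test databases, never for anything persistent.
            "environment": {"MYSQL_ALLOW_EMPTY_PASSWORD": "yes"},
            "tmpfs": [MYSQL_DATA_DIR],
        },
    }


if __name__ == "__main__":
    # Print the generated definitions for inspection.
    print(json.dumps({"services": tmpfs_database_services()}, indent=2))
```

Written by hand in a compose file, each service would simply carry a `tmpfs:` list with the data directory in it.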

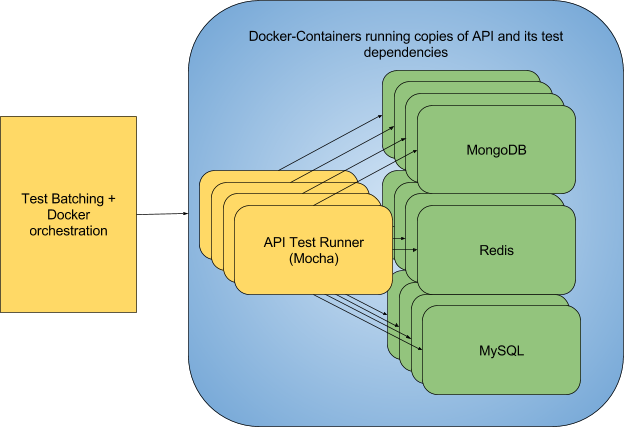

The new setup uses docker-compose to spin up multiple groups of containers and splits our tests into batches that execute in parallel. A simple helper script sorts all the test files by name and splits them into the desired number of batches. With this strategy, the total run time landed at about 12 minutes.
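The batching helper described above fits in a few lines. This sketch assumes test files match a hypothetical `*.test.js` naming pattern and that each batch receives a contiguous slice of the sorted list, with batch sizes differing by at most one; the real script may divide the files differently.

```python
from pathlib import Path
from typing import List


def find_test_files(root: str, pattern: str = "*.test.js") -> List[str]:
    """Collect test files under `root` (the glob pattern is hypothetical)."""
    return [str(p) for p in Path(root).rglob(pattern)]


def split_into_batches(files: List[str], batch_count: int) -> List[List[str]]:
    """Sort files by name and cut them into `batch_count` contiguous batches.

    Batch sizes differ by at most one: the first `len(files) % batch_count`
    batches each receive one extra file.
    """
    if batch_count < 1:
        raise ValueError("batch_count must be at least 1")
    ordered = sorted(files)
    base, extra = divmod(len(ordered), batch_count)
    batches, start = [], 0
    for i in range(batch_count):
        end = start + base + (1 if i < extra else 0)
        batches.append(ordered[start:end])
        start = end
    return batches


if __name__ == "__main__":
    files = ["c.test.js", "a.test.js", "e.test.js", "b.test.js", "d.test.js"]
    print(split_into_batches(files, 2))
    # [['a.test.js', 'b.test.js', 'c.test.js'], ['d.test.js', 'e.test.js']]
```

Sorting first makes the assignment deterministic: as long as the set of test files doesn't change, a given file always lands in the same batch, which makes a failing batch easy to reproduce.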

Each group of containers runs the entire API plus its dependencies and executes a batch of test files.

Using yarnpkg to install dependencies

Moving from npm to yarnpkg helped a lot: a full install of node_modules takes noticeably less time with yarnpkg than npm needs to install the same set of dependencies.

At this point we were down to about 10 minutes for running the entire test-suite.

Running a “swarm” of Docker containers in the cloud

The final step towards speeding up our builds was offloading testing to a powerful instance that can easily run all of these containers, for multiple developers at the same time. Our API TestBot is a beefy AWS EC2 c4.4xlarge instance (16 vCPUs, 30 GiB of memory).

This increased our concurrency from 3 on local developer machines to 6 container-groups, each running its own copies of:

- MongoDB

- Redis

- MySQL

- The Mocha test runner

with every container-group executing its own test batch in parallel.
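A single container-group can be described as one docker-compose project holding the four services listed above. In this sketch every `container_name` is prefixed with the developer's name and the group index. The image names, the `BATCH_INDEX` variable and the naming scheme are hypothetical placeholders, not our actual configuration.

```python
from typing import Dict


def container_group(developer: str, group: int) -> Dict[str, dict]:
    """Build docker-compose services for one container-group.

    Each group gets its own MongoDB, Redis and MySQL plus a test runner, so
    groups never share database state. `container_name` carries the
    developer's name and the group index so containers are easy to find,
    debug and tear down. Image names and BATCH_INDEX are hypothetical.
    """
    prefix = f"{developer}-api{group}"
    services = {
        "mongo": {"image": "mongo"},
        "redis": {"image": "redis"},
        "mysql": {
            "image": "mysql",
            "environment": {"MYSQL_ALLOW_EMPTY_PASSWORD": "yes"},
        },
        "tests": {
            "image": "api-tests",  # hypothetical image with the API and Mocha
            "environment": {"BATCH_INDEX": str(group)},  # which batch to run
            "depends_on": ["mongo", "redis", "mysql"],
        },
    }
    for name, service in services.items():
        service["container_name"] = f"{prefix}-{name}"
    return services
```

Running each group as its own compose project (for example with `docker-compose -p`) gives it a separate default network, so every tests container reaches its own databases by service name (`mongo`, `redis`, `mysql`) without colliding with other groups. Six groups of four services account for 24 containers per developer.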

The test-fast workflow:

This is what our testing workflow looks like now:

- As soon as someone issues a `make build-fast` command, a remote directory is created for them on the API TestBot instance.

- Their local API directory is rsync'd to their personal remote directory on the API TestBot. If they have run tests before, rsync is even faster because it only sends the diff.

- The Docker orchestration script kicks off and creates the required set of containers for each test batch.

- Currently, a concurrency of 6 works best for us. This creates 6 copies of the API container-group, for a total of 24 containers per user.

- Each container in a group gets a unique name, prefixed with the name of the developer running the tests, so that setup, teardown and debugging of the containers are easy.

- Tests run in parallel, and if any group encounters a failed test, the orchestration script captures the failure and fails the entire test suite.
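The orchestration step can be sketched as a thread pool that starts every group at once and fails the suite if any group fails. This assumes each group is its own compose project named `<developer>-api<N>` with a service called `tests` (hypothetical names), and it relies on `docker-compose up --exit-code-from`, a real docker-compose option, to surface that service's exit code. Our actual script may work differently.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def compose_up_command(developer: str, group: int) -> List[str]:
    """docker-compose invocation for one group (project naming is hypothetical).

    --exit-code-from implies --abort-on-container-exit: the group stops as
    soon as any of its containers exits, and the command returns the exit
    code of the `tests` container.
    """
    return [
        "docker-compose",
        "-p", f"{developer}-api{group}",
        "up",
        "--exit-code-from", "tests",
    ]


def run_group(developer: str, group: int) -> int:
    """Run one container-group to completion and return its exit code."""
    return subprocess.run(compose_up_command(developer, group)).returncode


def run_suite(developer: str, concurrency: int,
              runner: Callable[[str, int], int] = run_group) -> int:
    """Run all groups in parallel; a single failing group fails the suite."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        codes = list(pool.map(lambda g: runner(developer, g), range(concurrency)))
    failed = [g for g, code in enumerate(codes) if code != 0]
    for g in failed:
        print(f"group {g} failed with exit code {codes[g]}")
    return 1 if failed else 0
```

Threads are enough here because each worker only waits on a child process; the real work happens inside the containers. The `runner` parameter also makes the failure-aggregation logic easy to exercise without Docker.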

Hacks

Async I/O limits

Running lots of tests in parallel led to flaky tests due to resource contention between so many copies of the database containers. After some investigation, we found that we were hitting the kernel's limit on asynchronous I/O (`fs.aio-max-nr`).

Increasing it from 262144 to 1048576 with `sudo sysctl fs.aio-max-nr=1048576` fixed that problem.
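To see how close a Linux machine is to this limit before tests start flaking, a small check like the following can help. It reads the counters the kernel exposes under `/proc/sys/fs`; it is a diagnostic sketch rather than part of our tooling.

```python
from pathlib import Path
from typing import Tuple


def read_aio_counters(proc_dir: str = "/proc/sys/fs") -> Tuple[int, int]:
    """Return (aio-nr, aio-max-nr) from the Linux proc filesystem.

    aio-nr is the total number of async I/O events reserved by all active
    io_setup() contexts; an io_setup() call that would push it past
    aio-max-nr fails with EAGAIN.
    """
    base = Path(proc_dir)
    current = int((base / "aio-nr").read_text())
    limit = int((base / "aio-max-nr").read_text())
    return current, limit


def usage_fraction(current: int, limit: int) -> float:
    """Fraction of the async I/O limit in use (1.0 means exhausted)."""
    return current / limit


if __name__ == "__main__":
    if Path("/proc/sys/fs/aio-nr").exists():
        current, limit = read_aio_counters()
        print(f"aio-nr={current} aio-max-nr={limit} "
              f"({usage_fraction(current, limit):.0%} used)")
    else:
        print("No /proc/sys/fs/aio-nr here; this check only applies to Linux.")
```

A value set with `sysctl` on the command line does not survive a reboot; to keep it, the same `fs.aio-max-nr = 1048576` setting can go in `/etc/sysctl.conf` or a file under `/etc/sysctl.d/`.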

Docker-compose: wait-for-it!

Our API test container also needs to wait for its resources (the Redis, Mongo and MySQL containers) to become available. Docker-compose's dependency feature (`depends_on`) only waits for a container to start, which isn't the same as the service inside it being ready to accept connections. So we poll in a quick loop to make sure the databases are actually ready before starting our tests.
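A readiness poll can be as simple as retrying a TCP connection until it succeeds. This sketch assumes the databases are reachable by their compose service names on their default ports (`mongo:27017`, `redis:6379`, `mysql:3306`); those host names are hypothetical.

```python
import socket
import time
from typing import Iterable, Tuple

# Hypothetical compose service names on their default ports.
DEPENDENCIES = [("mongo", 27017), ("redis", 6379), ("mysql", 3306)]


def wait_for_port(host: str, port: int, timeout: float = 60.0,
                  interval: float = 0.5) -> bool:
    """Retry a TCP connect to host:port until it succeeds or `timeout` passes.

    Returns True once something accepts the connection, False on timeout.
    Name-resolution failures (container not up yet) and refused connections
    are both OSError subclasses, so both are simply retried.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


def wait_for_dependencies(deps: Iterable[Tuple[str, int]] = DEPENDENCIES,
                          timeout: float = 60.0) -> bool:
    """Wait for each dependency in turn, stopping at the first that never comes up."""
    return all(wait_for_port(host, port, timeout) for host, port in deps)
```

The test entrypoint would call `wait_for_dependencies()` and exit non-zero if it returns False. An open port is a good proxy for readiness but not a guarantee; a stricter check would send a real command, such as a Redis `PING`.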

Rsync – make sure to delete unmatched files in the destination

Forgetting to add the `--delete` flag to rsync initially led to some confusion and weird test failures: files that had been deleted from the source directory (the developer's local environment) were still present in their remote test directory from an older test run. Thankfully, the fix was a single rsync flag: `--delete` removes files from the destination that no longer exist in the source.
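The sync step might look like the following sketch, which builds the rsync command line with `--delete` so that files removed locally also disappear on the TestBot. The host and paths in the example are hypothetical, and the `--rsync-path` trick for creating the per-user directory on the remote side is one common approach, not necessarily the one our scripts use.

```python
import shlex
from typing import List


def rsync_command(local_dir: str, remote_host: str, remote_dir: str) -> List[str]:
    """Build an rsync command that mirrors local_dir into remote_host:remote_dir.

    -a            archive mode: recurse and preserve permissions, times, symlinks
    -z            compress data in transit
    --delete      remove destination files that no longer exist locally, so
                  tests deleted on the laptop can't linger on the TestBot
    --rsync-path  run `mkdir -p` on the remote before rsync starts, so the
                  per-user directory exists on the very first sync
    The trailing slash on the source copies the directory's contents instead
    of nesting the directory itself inside remote_dir.
    """
    remote_dir = remote_dir.rstrip("/")
    return [
        "rsync", "-az", "--delete",
        f"--rsync-path=mkdir -p {shlex.quote(remote_dir)} && rsync",
        local_dir.rstrip("/") + "/",
        f"{remote_host}:{remote_dir}/",
    ]


if __name__ == "__main__":
    # Hypothetical host and paths, printed as a copy-pasteable shell command.
    cmd = rsync_command("/home/alice/api", "api-testbot", "/srv/testbot/alice")
    print(" ".join(shlex.quote(arg) for arg in cmd))
```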

Lessons learnt

Improving test execution times was certainly an investment for us, but it has paid off well, saving us more than 50 hours during busy weeks. We did run into some odd issues along the way, such as the kernel limits described above and tuning rsync with a hack to create per-user directories.

However, this helped us learn our stack much better and apply those lessons elsewhere too. Building on this work, we extended the setup to run Sandbox environments in which our mobile clients run their own integration tests.