Orchestrating Node.js Containers with Kubernetes

This blog post follows up on Containerizing Node.js Applications with Docker, where we covered what containers are, why organizations are finding them so useful in the modern software ecosystem, and best practices for turning Node.js applications into immutable containers with Docker.

The conversation doesn’t end at instantiating containers. If containers are enabling a microservice-based architecture, for example, how are the microservices communicating with each other? How are DevOps teams implementing incremental rollouts, managing processes within containers, and scaling up and down to stay in line with demand?

The answer: a container orchestration layer.

In this post, we will look at:

- Orchestration demands for simple and complex container architectures.

- An overview of container orchestration and Kubernetes.

- Networking in a containerized environment.

The Orchestration Layer

In a containerized architecture, the orchestration layer oversees container deployment, scaling, and management. The orchestration layer accomplishes:

- Scheduling of containers to physical/virtual machines, sometimes encompassing thousands of container-machine relationships.

- Restarting containers if they stop.

- Enabling container networking.

- Scaling containers and associated resources up and down as needed.

- Service discovery.
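
To make the restart and scaling duties above more concrete, here is a minimal sketch of the "desired state versus observed state" comparison that an orchestration layer performs. The `Container` and `Plan` types and the `reconcile` function are hypothetical names invented for illustration; they are not part of Docker's or Kubernetes' APIs, and a real orchestrator handles far more cases.

```typescript
// Hypothetical types for illustration only; not a real orchestrator API.
type ContainerState = "running" | "stopped";

interface Container {
  id: string;
  state: ContainerState;
}

interface Plan {
  restart: string[]; // ids of stopped containers to restart
  start: number; // number of additional containers to launch
  stop: string[]; // ids of surplus running containers to stop
}

// Compare the observed containers with the desired replica count and
// return the actions needed to converge on the desired state.
function reconcile(desired: number, observed: Container[]): Plan {
  const running = observed.filter((c) => c.state === "running");
  const stopped = observed.filter((c) => c.state === "stopped");

  // Restart stopped containers first, but only as many as are needed.
  const missing = Math.max(0, desired - running.length);
  const restart = stopped.slice(0, missing).map((c) => c.id);

  // Launch new containers if restarts alone cannot cover the gap.
  const start = missing - restart.length;

  // Scale down by stopping any running containers beyond the desired count.
  const stop = running.slice(desired).map((c) => c.id);

  return { restart, start, stop };
}

// Three replicas are wanted, one is running and one has stopped.
const examplePlan = reconcile(3, [
  { id: "web-1", state: "running" },
  { id: "web-2", state: "stopped" },
]);
console.log(examplePlan); // { restart: [ 'web-2' ], start: 1, stop: [] }
```

An orchestrator runs a comparison like this continuously, so a stopped container or a change in the desired count is corrected without manual intervention.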

There has been a lot of buy-in from a range of PaaS and IaaS providers around container orchestration. Depending on how distributed your organization’s container architecture is, there are several options for orchestration that provide correspondingly complex (or simple) capabilities.

Orchestrating Simpler Architectures

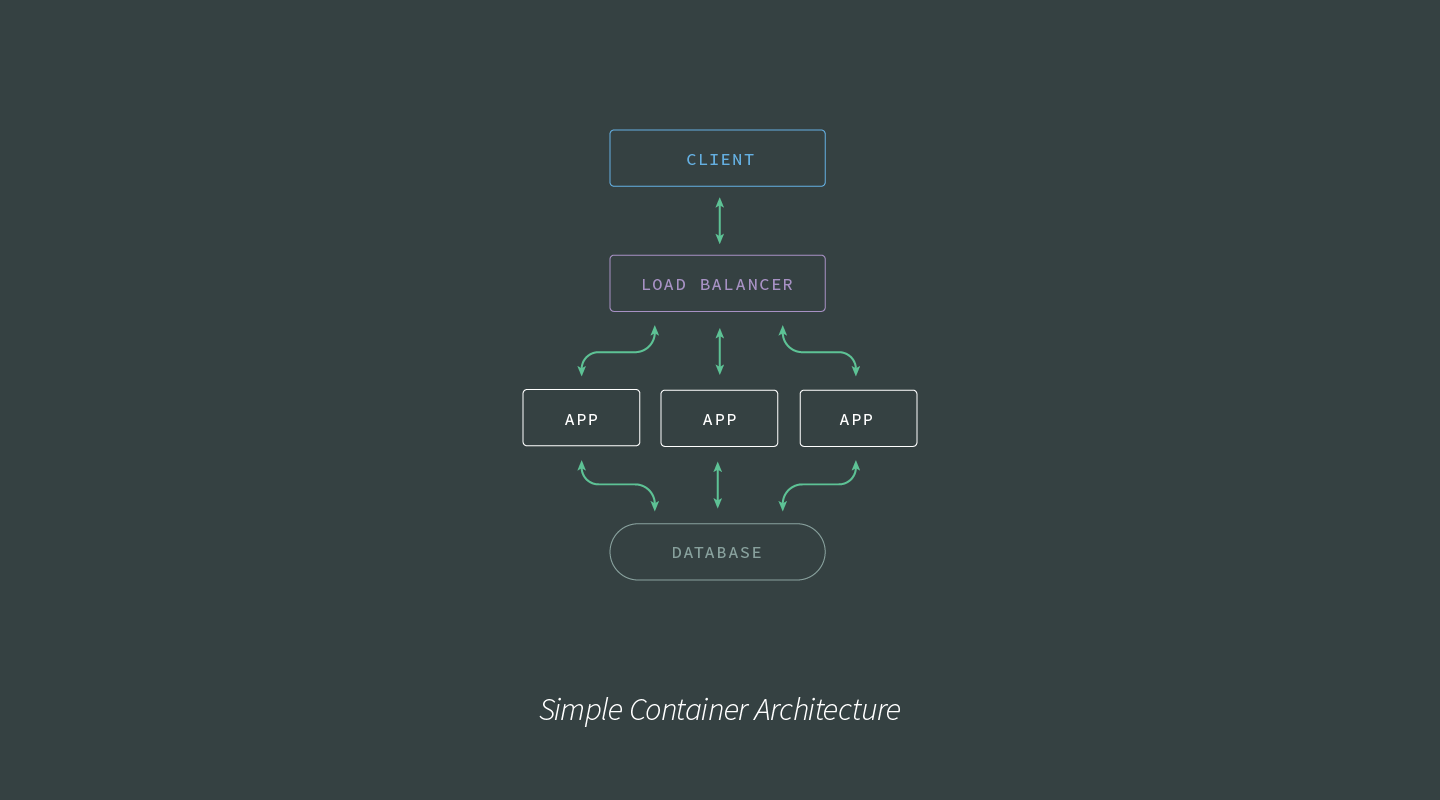

What’s a simple Node.js architecture? If your application is supported by just a few processes, one or two databases, a load balancer, a client, and exists on a single host—or something comparable to this scale—then your orchestration demands can most likely be met by Docker’s orchestration tooling.

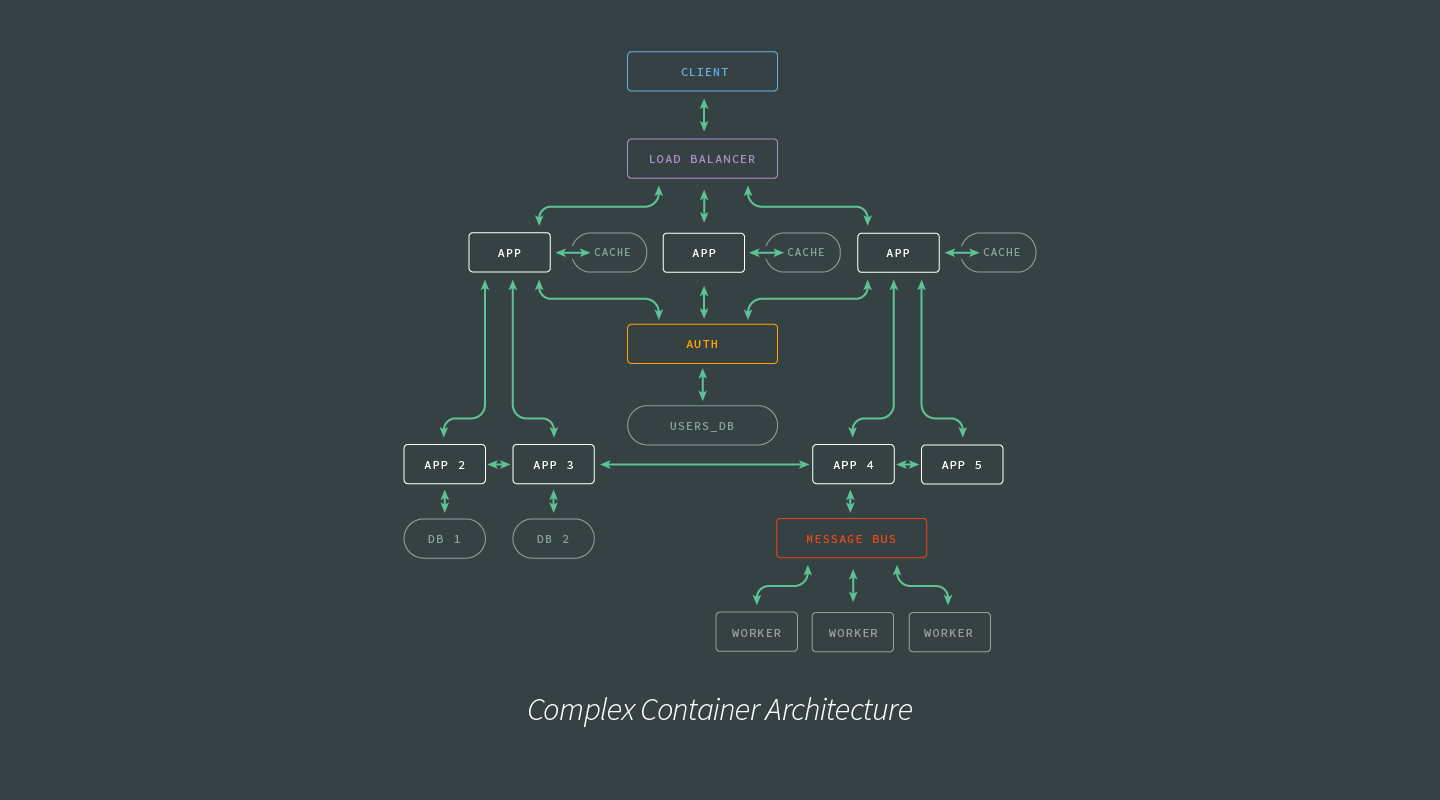

If, however, your container architecture looks more like the more distributed layout pictured here, an orchestration solution such as Amazon ECS, Nomad, or Kubernetes is better suited to at-scale production demands. This post will focus on Kubernetes.

Container Orchestration with Kubernetes

Kubernetes Overview

Kubernetes (‘K8s’) is an open-source system for automating and managing container orchestration. It grew out of Google’s Borg and is now maintained by the Cloud Native Computing Foundation.

With a smooth user experience focused on developers and DevOps engineers, and an impressive suite of orchestration features including automated rollouts and rollbacks, service discovery, load balancing, and secret and configuration management, Kubernetes has generated a lot of support in a short time. Integration with all of the major cloud providers keeps Kubernetes portable to a range of infrastructures.

Kubernetes Architecture

The master-node based architecture of Kubernetes lends itself to rapid, horizontal scaling. Networking features help facilitate rapid communication between, to, and from the various elements of Kubernetes.

Here are the core components of the Kubernetes architecture:

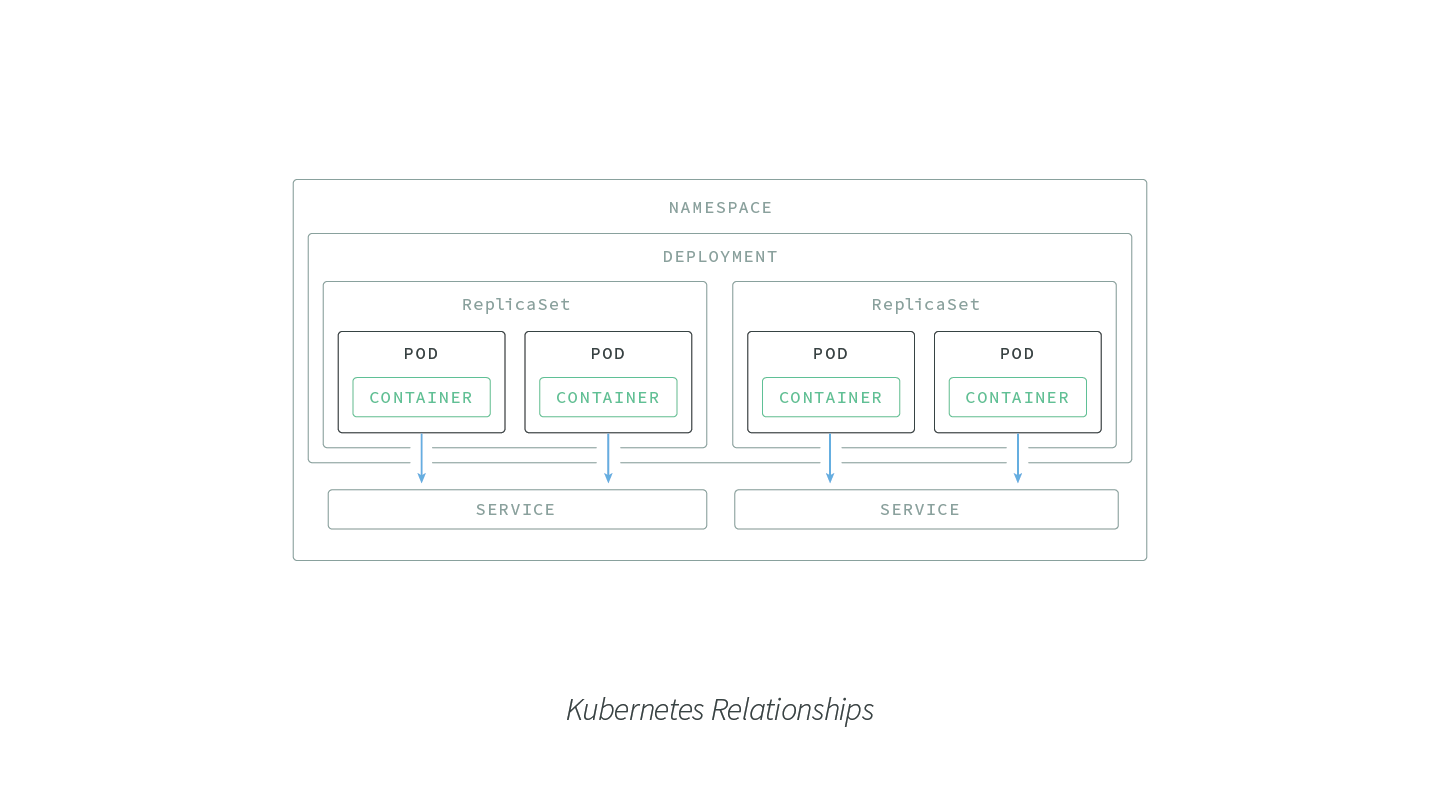

- Pod: The smallest deployable unit created and managed by Kubernetes, a Pod is a group of one or more containers. Containers within a Pod share an IP address and can access each other via localhost as well as enjoy shared access to volumes.

- Node: A worker machine in Kubernetes. May be a VM or a physical machine, and comes with services necessary to run Pods.

- Service: An abstraction which defines a logical set of Pods and a policy for accessing them. A Service provides a stable IP address in front of a set of Pod replicas, allowing other Pods or Services to communicate with them even as individual Pods are replaced.

- ReplicaSet: Ensures that a specified number of Pod replicas are running at any given time. The Kubernetes documentation recommends using Deployments instead of directly manipulating ReplicaSet objects, unless you require custom update orchestration or don’t require updates at all.

- Deployment: A controller that provides declarative updates for Pods and ReplicaSets.

- Namespace: Virtual cluster backed by the same physical cluster. A way to divide cluster resources between multiple users, and a mechanism to attach authorization and policy to a subsection of a given cluster.
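
As a rough illustration of how the Service and Namespace components above surface in application code, the sketch below shows two ways a Node.js process might locate a Service. Kubernetes gives each Service a DNS name of the form `<service>.<namespace>.svc.<cluster-domain>` (with `cluster.local` as the default cluster domain), and it injects `<NAME>_SERVICE_HOST` and `<NAME>_SERVICE_PORT` environment variables into Pods for Services that already existed when the Pod started. The helper functions and the `orders-api` and `shop` names are hypothetical, chosen only for this example.

```typescript
// Environment variables as a plain map, so the helper is easy to test.
type Env = Record<string, string | undefined>;

// Build the cluster-internal DNS name for a Service.
// "cluster.local" is the default cluster domain; a cluster may be configured differently.
function serviceDnsName(
  service: string,
  namespace: string = "default",
  clusterDomain: string = "cluster.local"
): string {
  return `${service}.${namespace}.svc.${clusterDomain}`;
}

// Look up a Service address from the injected environment variables.
// The Service name is upper-cased and dashes become underscores.
function serviceFromEnv(service: string, env: Env): string | undefined {
  const prefix = service.toUpperCase().replace(/-/g, "_");
  const host = env[`${prefix}_SERVICE_HOST`];
  const port = env[`${prefix}_SERVICE_PORT`];
  return host && port ? `${host}:${port}` : undefined;
}

// "orders-api" and "shop" are made-up names for illustration.
console.log(serviceDnsName("orders-api", "shop"));
// orders-api.shop.svc.cluster.local

console.log(
  serviceFromEnv("orders-api", {
    ORDERS_API_SERVICE_HOST: "10.0.0.12",
    ORDERS_API_SERVICE_PORT: "8080",
  })
);
// 10.0.0.12:8080
```

In a real Node.js process you would pass `process.env` as the second argument. DNS-based lookup is usually the more flexible option, since it does not depend on the order in which Pods and Services were created.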

The following image provides a visual layout describing the various scopes of the Kubernetes components:

Labels and Selectors

Kubernetes has provided several features for differentiating between users and objects:

- Labels: Key/value pairs attached to objects (like a Pod) containing identifying metadata, such as release line, environment, and stack tier.

- Selectors: The core grouping primitive in Kubernetes. Label selectors enable grouping or managing of objects via their labels.

Labels, selectors, and namespaces are critical in enabling Kubernetes to be so flexible and dynamic in its configuration capabilities. Bear in mind that the label selectors of two controllers must not overlap within a namespace, otherwise there will be conflicts.
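
The matching rule behind equality-based label selectors can be sketched in a few lines. This is a simplified model, not Kubernetes' implementation: the `LabeledObject` type, the helper functions, and the sample Pod names are assumptions made for illustration, and set-based selector expressions are left out.

```typescript
type Labels = Record<string, string>;

// Hypothetical stand-in for any labeled Kubernetes object, such as a Pod.
interface LabeledObject {
  name: string;
  labels: Labels;
}

// An equality-based selector matches when every key/value pair it names
// is present, with the same value, in the object's labels.
function matches(selector: Labels, labels: Labels): boolean {
  return Object.keys(selector).every((key) => labels[key] === selector[key]);
}

// Return the objects picked out by a selector.
function select(selector: Labels, objects: LabeledObject[]): LabeledObject[] {
  return objects.filter((o) => matches(selector, o.labels));
}

// Return the names of objects claimed by both selectors, which is the
// overlap that two controllers in one namespace need to avoid.
function overlap(a: Labels, b: Labels, objects: LabeledObject[]): string[] {
  return objects
    .filter((o) => matches(a, o.labels) && matches(b, o.labels))
    .map((o) => o.name);
}

const samplePods: LabeledObject[] = [
  { name: "web-a", labels: { app: "web", tier: "frontend", env: "production" } },
  { name: "web-b", labels: { app: "web", tier: "frontend", env: "staging" } },
  { name: "db-a", labels: { app: "db", tier: "backend", env: "production" } },
];

console.log(select({ tier: "frontend", env: "production" }, samplePods).map((p) => p.name));
// [ 'web-a' ]

console.log(overlap({ app: "web" }, { tier: "frontend" }, samplePods));
// [ 'web-a', 'web-b' ]
```

The second call shows the kind of conflict described above: two selectors that look different can still claim the same Pods, so selectors are best designed around a shared, deliberate labeling scheme.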

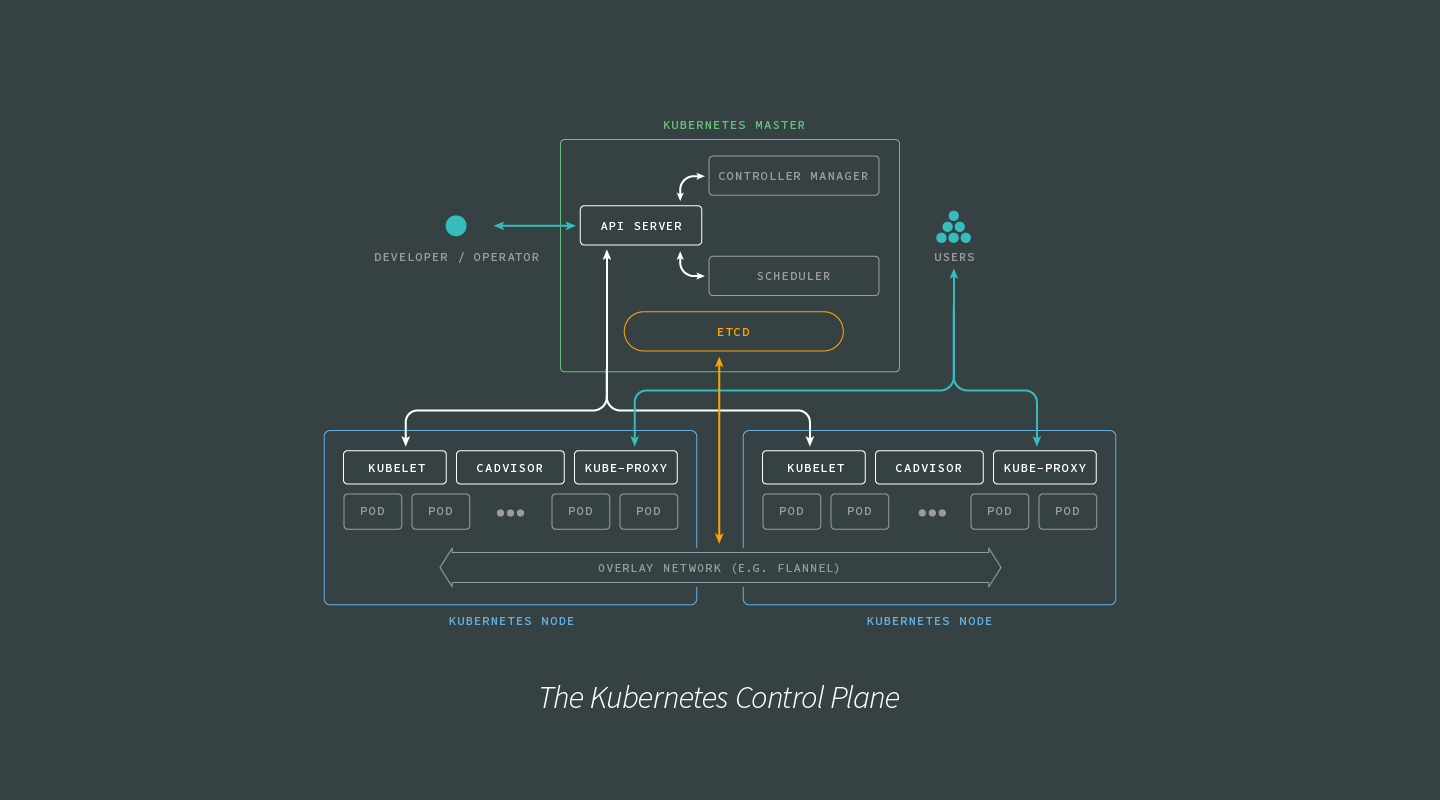

As Kubernetes itself is built on a distributed architecture, it excels at building and managing microservice and other distributed architectures. While burrowing into the details of the various services that power Kubernetes is outside the scope of this article, the following image shows a higher-level look at the interactions between the various elements of the Kubernetes Control Plane:

Keep the Control Plane information flows in mind as we look into how Kubernetes handles container networking.

Container Networking

Networking between containers is one of the more demanding software challenges in container orchestration. In this section we will look at how Docker handles container networking, how this approach limits Docker’s abilities to orchestrate containers at scale, and how the Kubernetes approach to networking challenges makes Kubernetes orchestration better suited for graceful, rapid scaling.

Networking the Docker Way

By default, Docker containers use host-private networking. To do this, Docker provisions a ‘virtual bridge,’ called docker0 by default, on the host with space for each container provisioned inside the bridge. To connect to the virtual bridge, Docker allocates each container a veth (virtual ethernet device), which is then mapped to appear as eth0 in the container via network address translation (NAT). NAT is a method of mapping one IP address into another by modifying network address information in the IP headers of packets.
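
To illustrate the NAT definition above, here is a toy model of destination address rewriting, the kind of translation that takes place when traffic sent to a host address is forwarded to a container on the bridge. It is a conceptual sketch only: real NAT is performed by the host's networking stack, and the `Packet` and `NatRule` types, the `translate` function, and all addresses and ports shown are assumptions chosen for illustration.

```typescript
// Simplified packet: only the header fields relevant to the example.
interface Packet {
  dstIp: string;
  dstPort: number;
  payload: string;
}

// One translation rule: traffic for host address/port goes to a container address/port.
interface NatRule {
  hostIp: string;
  hostPort: number;
  containerIp: string;
  containerPort: number;
}

// Rewrite the destination of a packet if a rule matches; otherwise leave it untouched.
function translate(packet: Packet, rules: NatRule[]): Packet {
  for (const rule of rules) {
    if (rule.hostIp === packet.dstIp && rule.hostPort === packet.dstPort) {
      // Only the header fields change; the payload is passed through as-is.
      return { ...packet, dstIp: rule.containerIp, dstPort: rule.containerPort };
    }
  }
  return packet;
}

// Illustrative addresses: a documentation-range host IP and a private bridge IP.
const natRules: NatRule[] = [
  { hostIp: "203.0.113.10", hostPort: 8080, containerIp: "172.17.0.2", containerPort: 3000 },
];

const incoming: Packet = { dstIp: "203.0.113.10", dstPort: 8080, payload: "GET /" };
console.log(translate(incoming, natRules));
// { dstIp: '172.17.0.2', dstPort: 3000, payload: 'GET /' }
```

Every packet crossing the boundary pays for this lookup and rewrite, and the container's own address is never the one that outside callers use, which is the root of the limitations discussed next.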

This presents a couple of problems for DevOps:

First and most importantly, Docker containers networked via bridging can only talk to containers on the same machine or virtual bridge. This is okay for projects of limited scale with fairly narrow networking demands, but problematic once many hosts and machines are involved.

Secondly, the reliance on NAT can lead to a non-negligible hit on performance.

Networking the Kubernetes Way

Networking with Kubernetes is intended to be more performant and scalable than with the default Docker tooling. To make this possible, Kubernetes networking implementations must meet the following requirements:

- All containers can communicate with all other containers without NAT.

- All Nodes can communicate with all containers (and vice versa) without NAT.

- A container references itself with the same IP address that other containers use to reference it.

When these conditions are met, it becomes much easier to coordinate ports across multiple teams and developers. Software like Flannel, Weave Net, and Calico provide well-supported Kubernetes networking implementations.

Summary

Coupled with Docker, Kubernetes presents an elegant solution to automating the management, deployment, and scaling of containerized Node.js applications. Highly portable and supported by all major cloud providers, Kubernetes is helping power the microservice-based architectures of modern software.

While it has many moving parts, Kubernetes’ design creates powerful points of abstraction that make features such as automated rollouts, rollbacks, and load balancing, as well as non-trivial networking demands like service discovery and container-container communication, configurable and predictable.

One Last Thing

If you’re interested in managing Node.js Docker containers, you may be interested in N|Solid. We work to make sure Docker and Kubernetes are first-class citizens for enterprise users of Node.js who need insight and assurance for their Node.js deployments.

If you’d like to tune into the world of Node.js, Docker, Kubernetes, and large-scale Node.js deployments, be sure to follow us at @NodeSource on Twitter.